Each month, this newsletter is read by over 45K operators, investors, and tech/product leaders and executives. If you found value in this newsletter, please consider supporting it by subscribing or upgrading. This helps support independent AI analysis. If you are technical, looking for new opportunities, and want to connect, my DMs are open on Substack.

The biggest story about GPT-5 isn’t what it can do, but what it means for OpenAI’s business.

Here’s the most important headline of today: OpenAI will retire all existing models (4o, o1, o3, etc.) and unify them under a single GPT-5 umbrella.

That ends the era where users could directly control which model ran their queries, a shift Sam Altman telegraphed back in February.

Effectively, we’ve entered the “automatic transmission” era of AI, where manually picking a model is like driving stick. For most users, that’s a good thing: few can tell the difference between GPT-4o and o3 anyway.

But this is also a major business win for OpenAI. GPT-5 finally gives the company the mechanism to manage inference costs dynamically and keep COGS low.

It also sets off important second-order effects:

It saves OpenAI a ton of money by optimizing compute allocation and preventing abuse.

It increases platform power by turning model routing into a black box—better positioning OpenAI to move toward usage-based pricing.

And it puts immense pressure on Anthropic to follow OpenAI’s path on open source.

In this post, I’ll unpack each of these three strategic implications from the GPT-5 release.

Cost Savings for OpenAI

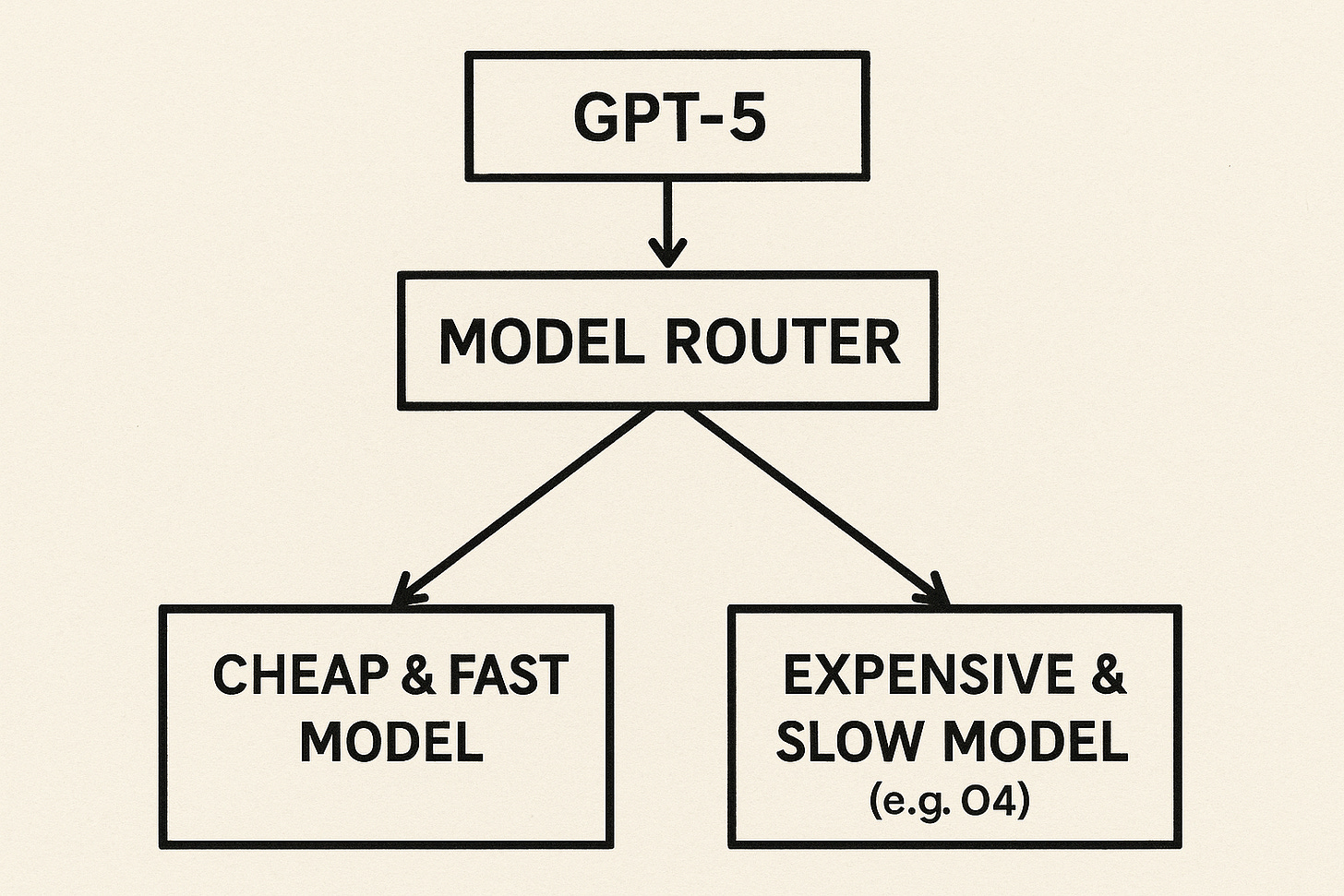

Unifying models under GPT-5 lets OpenAI fully control which model(s) fulfill each user query, via a component called the model router.

Just as humans have System 1 (fast, intuitive) and System 2 (slow, deliberate) thinking modes, the new GPT-5 architecture emulates that split. Here is a direct quote from the system card:

GPT‑5 is a unified system … a real-time router that quickly decides which model to use based on conversation type, complexity, tool needs, and explicit intent (for example, if you say “think hard about this” in the prompt).

This is obviously great for OpenAI’s cost optimization efforts, because it can now match compute to the complexity of each user’s question, for example by preventing people from spamming overpowered models (such as o3) on basic homework questions.

Now, the model router itself becomes both the thing to optimize and a choke point. The better the model router, the greater the cost savings for OpenAI (by correctly allocating just enough compute to every request).

Before, OpenAI didn’t have this mechanism. By exposing the “raw” model, it opened itself up to abuse scenarios.
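To make the cost argument concrete, here is a minimal sketch of what a router could look like. Everything in it is an assumption for illustration: the two backend names, the relative costs, the cue phrases, and the 200-word threshold are all made up, and OpenAI has not published how its real router works. It only shows why routing saves money compared with sending every request to the expensive model.

```python
from dataclasses import dataclass

# Hypothetical per-request costs in arbitrary units; not OpenAI's real numbers.
COST = {"fast": 1.0, "reasoning": 10.0}

# Hypothetical cue phrases that signal explicit intent to reason harder.
THINK_CUES = ("think hard", "step by step", "prove")


@dataclass
class Request:
    prompt: str
    needs_tools: bool = False


def route(req: Request) -> str:
    """Pick a backend with crude heuristics: explicit intent, tool needs, length."""
    text = req.prompt.lower()
    if any(cue in text for cue in THINK_CUES):
        return "reasoning"
    if req.needs_tools or len(text.split()) > 200:
        return "reasoning"
    return "fast"


def always_reasoning(req: Request) -> str:
    """Baseline with no router: every request goes to the expensive model."""
    return "reasoning"


def total_cost(requests, router=route) -> float:
    """Sum the cost of serving every request through the given router."""
    return sum(COST[router(r)] for r in requests)


if __name__ == "__main__":
    traffic = [
        Request("What is 12 times 9?"),
        Request("Translate 'hello' to French."),
        Request("Think hard about this: is the argument below valid?"),
        Request("Book a flight and email me the receipt.", needs_tools=True),
    ]
    routed = total_cost(traffic)
    baseline = total_cost(traffic, always_reasoning)
    print(f"routed={routed} baseline={baseline} savings={1 - routed / baseline:.0%}")
```

The savings depend entirely on the traffic mix and on how often the router guesses right, which is why the router's quality matters so much.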

More Platform Power to OpenAI

The flip side: routing adds opacity.

There’s now an extra layer of indirection between the prompt and the output, and the routing decisions are non-deterministic.

With o3, running a query a thousand times meant hitting the same model every time. That’s not guaranteed with GPT-5.
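A rough way to see how quickly that guarantee erodes: assume, purely for illustration, that there are two backends and that the router sends a given prompt to the reasoning model with a fixed probability, independently on each run. Neither assumption is a documented property of GPT-5. Under that toy model, the chance that every run lands on the same backend is p^n + (1 - p)^n.

```python
def same_backend_probability(p_reasoning: float, runs: int) -> float:
    """Chance that `runs` independent calls all land on one backend.

    Assumes two backends and independent routing with a fixed probability,
    which is a simplification and not a documented property of GPT-5.
    """
    if not 0.0 <= p_reasoning <= 1.0:
        raise ValueError("p_reasoning must be between 0 and 1")
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Either every run hits the reasoning model, or every run hits the fast one.
    return p_reasoning ** runs + (1.0 - p_reasoning) ** runs


if __name__ == "__main__":
    for p in (1.0, 0.99, 0.9):
        print(p, same_backend_probability(p, 1000))
```

Even a router that picks the same backend 99% of the time leaves a well-under-1% chance that a thousand runs all hit one model.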

Also, it’s unclear whether future improvements to GPT-5 will come from changes to:

the model router layer

the models that sit behind the router

or how much test-time compute GPT-5 allocates behind the scenes.

GPT-5 notably exposes a parameter called `reasoning_effort` with levels such as low, medium, and high. It’s not clear what “high” means now, versus what “high” will mean in 3 months.
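One practical response is to pin the effort level explicitly and log it next to every output, so that a later run can be compared against today's. The sketch below only builds and logs the request; it makes no network call. The helper names `build_request` and `log_record` are hypothetical, and the assumption is that the resulting dict is passed to a chat completion call that accepts `reasoning_effort` as a top-level argument.

```python
import datetime
import json

# Effort levels named in the text; the live API may accept others.
EFFORT_LEVELS = ("low", "medium", "high")


def build_request(prompt: str, effort: str = "medium", model: str = "gpt-5") -> dict:
    """Build keyword arguments for a chat completion with an explicit effort level."""
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"effort must be one of {EFFORT_LEVELS}")
    return {
        "model": model,
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


def log_record(request: dict, output: str, date: str) -> str:
    """Serialize the settings and the output so later runs can be compared."""
    record = {
        "date": date,
        "model": request["model"],
        "reasoning_effort": request["reasoning_effort"],
        "output": output,
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    req = build_request("Summarize this contract in one line.", effort="high")
    # Assumption: with the `openai` package installed and an API key set, this
    # dict could be sent as OpenAI().chat.completions.create(**req).
    today = datetime.date.today().isoformat()
    print(log_record(req, output="<model output goes here>", date=today))
```

Replaying the same logged prompts a few months later is a cheap way to notice if “high” has quietly changed.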

This means we are now more reliant on OpenAI’s inner workings and routing algorithm to get good performance out of GPT-5.

OpenAI’s model router literally controls your performance, kind of like how Instagram’s algorithm controls your posts’ exposure.

Pressure on Anthropic

GPT-5 puts tremendous pressure on Anthropic to have an answer soon, especially on the open source front.

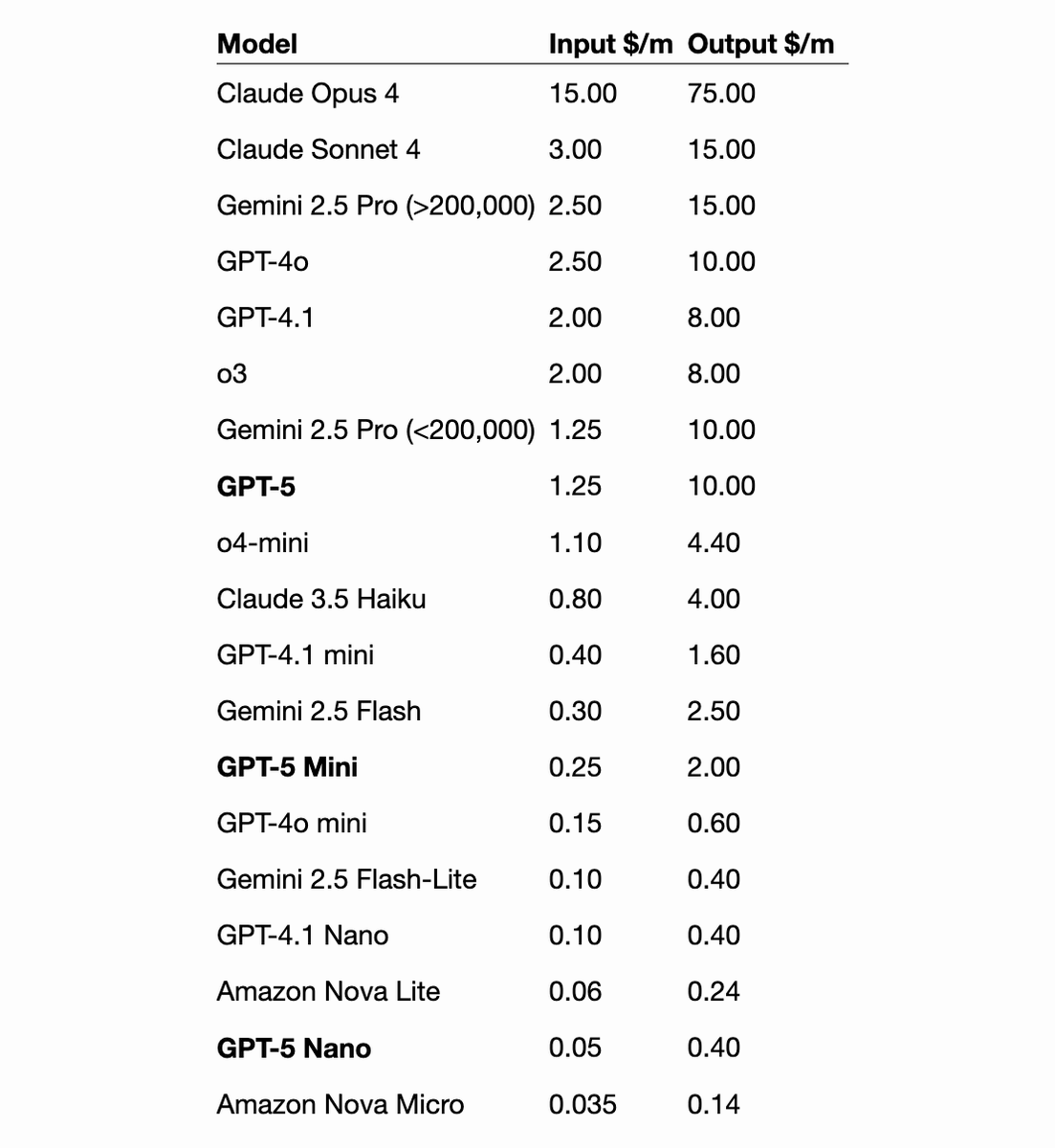

GPT-5 is 33% cheaper than Sonnet 4, while being just as good as Sonnet 4 on tool calling (based on my vibe checks). Notably, it's better at frontend coding than Sonnet 4, and has a better “design sense”.

GPT-5-nano is 5 cents per million input tokens, and is on par with GPT-4o.
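To put those numbers in dollar terms, here is a small back-of-the-envelope calculator. It treats pricing as a single flat rate per million tokens, which is a simplification (real bills distinguish token types), and the workload size and baseline bill are hypothetical.

```python
def cost_usd(tokens: int, price_per_million_usd: float) -> float:
    """Cost of processing `tokens` at a flat price per million tokens."""
    return tokens / 1_000_000 * price_per_million_usd


def discounted_price(baseline_price: float, discount: float) -> float:
    """Price after a fractional discount, e.g. 0.33 for '33% cheaper'."""
    return baseline_price * (1.0 - discount)


if __name__ == "__main__":
    nano_price = 0.05  # USD per million tokens, the figure quoted above
    monthly_tokens = 2_000_000_000  # hypothetical workload
    print(f"nano: ${cost_usd(monthly_tokens, nano_price):,.2f} per month")

    baseline_bill = 100.0  # hypothetical monthly bill on the pricier model
    print(f"33% cheaper: ${discounted_price(baseline_bill, 0.33):,.2f}")
```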

I expect the combination of gpt-oss-120b (which is at least 5x faster and 80% cheaper than Anthropic models) plus GPT-5 to be a formidable substitute for Anthropic’s models, whether consumed through a CLI (e.g. Claude Code) or via an IDE (e.g. Cursor).

Now, it’s Anthropic’s turn to:

either lower Sonnet 4’s price (which dents their enterprise API momentum), and/or

release a new Sonnet soon

About Me

I write the "Enterprise AI Trends" newsletter (read by over 45K readers worldwide per month), and help companies build the right AI solutions (https://ainativefirm.com).

Previously, I was a Generative AI architect at AWS, an early PM at Alexa, and the Head of Volatility Index Trading at Morgan Stanley. I studied CS and Math at Stanford (BS, MS).